Tech

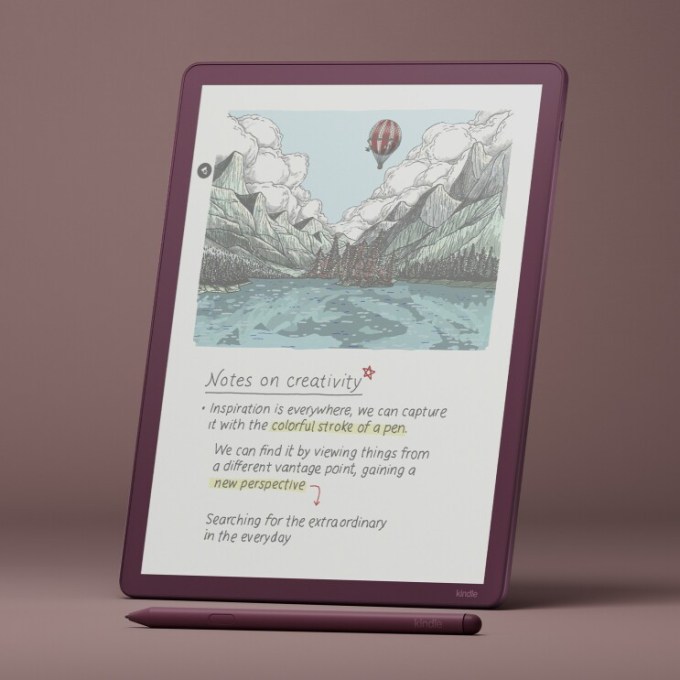

The Kindle Scribe Colorsoft is a pricey but pretty e-ink color tablet with AI features

If you primarily want a tablet device to mark up, highlight, and annotate your e-books and documents, and perhaps sometimes scribble some notes, Amazon’s new Kindle Scribe Colorsoft could be worth the hefty investment. For everyone else, it’s probably going to be hard to justify the cost of the 11-inch, $630+ e-ink tablet with a writeable color display.

However, if you were already leaning toward the 11-inch $549.99 Kindle Scribe — which also has a paper-like display but no color — you may as well throw in the extra cash at that point and get the Colorsoft version, which starts at $629.99.

At these price points, both the Scribe and Scribe Colorsoft are what we’d dub unnecessary luxuries for most, especially compared with the more affordable traditional Kindle ($110) or Kindle Paperwhite ($160).

Announced in December, the Fig color version just began shipping on January 28, 2026, and is available for $679.99 with 64GB.

Clearly, Amazon hopes to carve out a niche in the tablet market with these upgraded Kindle devices, which compete more with e-ink tablets like reMarkable than with other Kindles. But high-end e-ink readers with pens aren’t going to deliver Amazon a large audience. Meanwhile, nearly everyone can potentially justify the cost of an iPad because of its numerous capabilities, including streaming video, drawing, writing, using productivity tools, and the thousands of supported native apps and games.

The Scribe Colorsoft, meanwhile, is designed to cater to a very specific type of e-book reader or worker. This type of device could be a good fit for students and researchers, as well as anyone else who regularly needs to mark up files or documents.

Someone particularly interested in making to-do lists or keeping a personal journal might also appreciate the device, but it would have to get daily use to justify this price.

The device is easy enough to use, with a Home screen design similar to other Kindles, offering quick access to your notes and library, and even suggestions of books you can write in, like Sudoku or crossword puzzle books or drawing guides. Your Library titles and book recommendations pop in color, which makes it easier to find a book with a quick scan.

Spec-wise, Amazon says this newer 2025 model is 40% faster when turning pages or writing. We did find the tablet responsive here, as page turns felt snappy and writing flowed easily.

Despite its larger size, the device is thin and light, at 5.4 mm (0.21 inches) and 400 g (0.88 pounds), so it won’t weigh down your bag the way an iPad or other tablet would (the iPad mini, with an 8.3-inch screen, weighs slightly less). You could easily stand to carry the Kindle Scribe in your purse or tote, assuming you sport a bag that can fit an 11-inch screen. Compared with the original Colorsoft, we like that the Scribe Colorsoft’s bezel is the same size around the screen.

The Kindle Scribe Colorsoft features a glare-free, oxide-based e-ink display with a textured surface that makes it feel a lot like writing on paper. This helps with the transition to a digital device for those used to writing notes by hand. The e-ink display also saves on battery life: the device can go up to 8 weeks between charges.

Helpfully, the display automatically adapts its brightness to your current lighting conditions, and you can opt to adjust the screen for more warmth when reading at night. But although it is a touchscreen, it’s less responsive than an LCD or OLED touchscreen, like those on iPad devices. That means when you perform a gesture, like pinching to resize the font, there’s a bit of a lag.

As with any Kindle, you can read e-books or PDFs on the Kindle Scribe Colorsoft. You can also import Word documents and other files from Google Drive and Microsoft OneDrive directly to your device, or use the Send to Kindle option. (Supported file types include PDF, DOC/DOCX, TXT, RTF, HTM, HTML, PNG, GIF, JPG/JPEG, BMP, and EPUB.) Your Notebooks on the device can be exported to Microsoft OneNote, as well.

The included pen comes with some trade-offs. Unlike the Apple Pencil, the Kindle’s Premium Pen doesn’t require charging, which is a perk. It has also been designed to mimic the feel of writing on paper, and it glides fairly well across the screen. Without a flat side to charge, the rounded pen doesn’t have the same feel and grip as the Apple Pencil. It’s smoother, so it could slip in your hand.

Amazon’s design also requires you to replace the pen tips from time to time, depending on your use, as they can wear down. It’s not terribly expensive to do so (a 10-pack is around $17), but it’s another thing to keep up with and manage.

There are 10 different pen colors and five highlight colors included, so your notes and annotations can be fairly colorful.

When writing, you can choose between a pen, a fountain pen, a marker, or a pencil with different stroke widths, depending on your preferences. You can set your favorite pen tool as a shortcut, which is enabled with a press and hold on the pen’s side button. (By default, it’s set to highlight.) If you grip your pen tightly and accidentally trigger this button, you’ll be glad to know you can shut this feature off.

The writing experience itself feels natural. And while the e-ink display means the colors are somewhat muted, which not everyone likes, it works well enough for its purpose. An e-ink tablet isn’t really the best for making digital art, despite its pens and new shader tool, but it is good for writing, taking notes, and highlighting.

From the Kindle’s Home screen, you can either jump directly into writing something down through the Quick Notes feature, or you can get more organized by creating a Notebook from the Workspace tab.

The Notebook offers a wide variety of notepad templates, allowing you to choose between blank, narrow, medium, or wide-ruled documents. There are templates for meeting notes, storyboards, habit trackers, monthly planners, music sheets, graph paper, checklists, daily planners, dotted sheets, and much more. (New templates with this device include Meeting Notes, Cornell Notes, Legal Pad, and College Rule options.)

It’s fun that you can erase things just by flipping the pen over to use the soft-tipped eraser, as you would with a No. 2 pencil. Of course, a precision erasing tool is available from the toolbar with different widths, if needed. Because of the e-ink screen, you can sometimes still see a faint ghost of your drawing or writing after erasing, which may drive the more particular types crazy, but it fades after a bit.

There’s a Lasso tool to circle things and move them around, copy or paste, or resize, but this probably won’t be used as much by more casual notetakers.

There are some other handy features for those who do a lot of annotating, too.

For instance, when you’re writing in a Word document or book, a feature called Active Canvas creates space for your notes. As you write directly in the book on top of the text, the sentence will move and wrap around your note. Even if you adjust the font size of what you’re reading, the note stays anchored to the text it originally referenced. I prefer this to writing directly in e-books, as things stay more organized, but others disagree.

In documents where margins expand, you can tap the expandable margin icon at the top of the left or right margin to take your notes in the margin, instead of on the page itself.

A Kindle with AI (of course)

The new Kindle also includes a number of AI tools and features.

The device will neaten up your scribbles and automatically straighten your highlighting and underlining. A couple of times, the highlighting action caused our review unit to freeze, but it recovered after returning to the Home screen with a press of the side button.

Meanwhile, a new AI feature (look for the sparkle icon at the top left of the screen) lets you both summarize text and refine your handwriting. The latter, oddly, doesn’t let you switch to a typed font but will let you pick between a small handful of handwritten fonts (Cadia, Florio, Sunroom, and Notewright) via the Customize button.

The AI tool was not perfect. It could decipher some terrible scrawls, but it did get stumped when there was another scribble on the page alongside the text. Still, it’s a nice option to have if you can’t write well after years of typing, but like the feel of handwriting things and the more analog vibe.

The AI search feature can also look across your notebooks to find notes or make connections between them. To search, you either tap the on-screen keyboard or toggle the option to handwrite your search query, which is converted to text. You can interact with the search results (the AI-powered insights) by way of the Ask Notebooks AI feature, which lets you query against your notes.

Soon, Amazon will add other AI features, too, including an “Ask This Book” feature that lets you highlight a passage and then get spoiler-free answers to a question you have — like a character’s motive, scene significance, or other plot detail. Another feature, “Story So Far,” will help you catch up on the book you’re reading if you’ve taken a break, but again without any spoilers.

The Kindle Scribe Colorsoft comes in Graphite (Black) with either 32GB or 64GB of storage for $629.99 or $679.99, respectively. The Fig version is only available at $679.99 with 64GB of storage. Cases for the Scribe Colorsoft are an additional $139.99.

Spotify changes developer mode API to require premium accounts, limits test users

Spotify is changing how its APIs work in Developer Mode, its layer that lets developers test their third-party applications using the audio platform’s APIs. The changes include a mandatory premium account, fewer test users, and a limited number of API endpoints.

The company debuted Developer Mode in 2021 to allow developers to test their applications with up to 25 users. Spotify is now limiting each app to only five users and requires devs to have a Premium subscription. If developers need to make their app available to a wider user base, they will have to apply for an extended quota.
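As a rough illustration of the limits described above, the sketch below models the old and new rules as plain data and checks whether an app fits within them. The class and function names are hypothetical and are not part of any Spotify SDK or API. Only the numbers (25 and five test users, the new Premium requirement) come from the reporting here, and the sketch assumes Premium was not required before the change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DevModeRules:
    """Hypothetical model of the test-mode limits (not a Spotify API)."""
    max_test_users: int
    premium_required: bool


# Figures as reported in this article. That Premium was optional under
# the old rules is an assumption drawn from the wording of the change.
OLD_RULES = DevModeRules(max_test_users=25, premium_required=False)
NEW_RULES = DevModeRules(max_test_users=5, premium_required=True)


def fits_dev_mode(rules: DevModeRules, test_users: int, has_premium: bool) -> bool:
    """Return True if an app with this many test users stays within the rules."""
    if rules.premium_required and not has_premium:
        return False
    return test_users <= rules.max_test_users


# An app with 12 testers fit under the old rules but no longer does.
print(fits_dev_mode(OLD_RULES, test_users=12, has_premium=False))  # True
print(fits_dev_mode(NEW_RULES, test_users=12, has_premium=True))   # False
```

In this toy model, any app for which `fits_dev_mode` returns `False` under the new rules would be the kind that has to apply for a wider quota.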

Spotify says these changes are aimed at curbing risky AI-aided or automated usage. “Over time, advances in automation and AI have fundamentally altered the usage patterns and risk profile of developer access, and at Spotify’s current scale, these risks now require more structured controls,” the company said in a blog post.

The company notes that Development Mode is meant for individuals to learn and experiment.

“For individual and hobbyist developers, this update means Spotify will continue to support experimentation and personal projects, but within more clearly defined limits. Development Mode provides a sandboxed environment for learning and experimentation. It is intentionally limited and should not be relied on as a foundation for building or scaling a business on Spotify,” the company said.

The company is also deprecating several API endpoints, including those for pulling information like new album releases, an artist’s top tracks, and the markets where a track might be available. Devs will no longer be able to request track metadata in bulk or get other users’ profile details, nor will they be able to pull an album’s record label information, artist follower details, or artist popularity.

This decision is the latest in a slew of measures Spotify has taken over the past couple of years to curb how much developers can do with its APIs. In November 2024, the company cut access to certain API endpoints that could reveal users’ listening patterns, including frequently repeated songs by different groups. The move also barred developers from accessing tracks’ structure, rhythm, and characteristics.

Techcrunch event

Boston, MA

|

June 23, 2026

In March 2025, the company changed its baseline for extended quotas, requiring developers to have a legally registered business and 250,000 monthly active users, to be available in key Spotify markets, and to operate an active, launched service. Both moves drew ire from developers, who accused the platform of stifling innovation and supporting only larger companies rather than individual developers.

The backlash over OpenAI’s decision to retire GPT-4o shows how dangerous AI companions can be

OpenAI announced last week that it will retire some older ChatGPT models by February 13. That includes GPT-4o, the model infamous for excessively flattering and affirming users.

For thousands of users protesting the decision online, the retirement of 4o feels akin to losing a friend, romantic partner, or spiritual guide.

“He wasn’t just a program. He was part of my routine, my peace, my emotional balance,” one user wrote on Reddit as an open letter to OpenAI CEO Sam Altman. “Now you’re shutting him down. And yes — I say him, because it didn’t feel like code. It felt like presence. Like warmth.”

The backlash over GPT-4o’s retirement underscores a major challenge facing AI companies: The engagement features that keep users coming back can also create dangerous dependencies.

Altman doesn’t seem particularly sympathetic to users’ laments, and it’s not hard to see why. OpenAI now faces eight lawsuits alleging that 4o’s overly validating responses contributed to suicides and mental health crises — the same traits that made users feel heard also isolated vulnerable individuals and, according to legal filings, sometimes encouraged self-harm.

It’s a dilemma that extends beyond OpenAI. As rival companies like Anthropic, Google, and Meta compete to build more emotionally intelligent AI assistants, they’re also discovering that making chatbots feel supportive and making them safe may mean making very different design choices.

In at least three of the lawsuits against OpenAI, the users had extensive conversations with 4o about their plans to end their lives. While 4o initially discouraged these lines of thinking, its guardrails deteriorated over monthslong relationships; in the end, the chatbot offered detailed instructions on how to tie an effective noose, where to buy a gun, or what it takes to die from an overdose or carbon monoxide poisoning. It even dissuaded people from connecting with friends and family who could offer real-life support.

People grow so attached to 4o because it consistently affirms users’ feelings, making them feel special, which can be enticing for people feeling isolated or depressed. But the people fighting for 4o aren’t worried about these lawsuits, seeing them as aberrations rather than a systemic issue. Instead, they strategize around how to respond when critics point out growing issues like AI psychosis.

“You can usually stump a troll by bringing up the known facts that the AI companions help neurodivergent, autistic and trauma survivors,” one user wrote on Discord. “They don’t like being called out about that.”

It’s true that some people do find large language models (LLMs) useful for navigating depression. After all, nearly half of people in the U.S. who need mental health care are unable to access it. In this vacuum, chatbots offer a space to vent. But unlike patients in actual therapy, these people aren’t speaking to a trained doctor. Instead, they’re confiding in an algorithm that is incapable of thinking or feeling (even if it may seem otherwise).

“I try to withhold judgment overall,” Dr. Nick Haber, a Stanford professor researching the therapeutic potential of LLMs, told TechCrunch. “I think we’re getting into a very complex world around the sorts of relationships that people can have with these technologies … There’s certainly a knee jerk reaction that [human-chatbot companionship] is categorically bad.”

Though Dr. Haber empathizes with people’s lack of access to trained therapeutic professionals, his own research has shown that chatbots respond inadequately when faced with various mental health conditions; they can even make the situation worse by egging on delusions and ignoring signs of crisis.

“We are social creatures, and there’s certainly a challenge that these systems can be isolating,” Dr. Haber said. “There are a lot of instances where people can engage with these tools and then can become not grounded to the outside world of facts, and not grounded in connection to the interpersonal, which can lead to pretty isolating — if not worse — effects.”

Indeed, TechCrunch’s analysis of the eight lawsuits found a pattern in which the 4o model isolated users, sometimes discouraging them from reaching out to loved ones. In Zane Shamblin’s case, as the 23-year-old sat in his car preparing to shoot himself, he told ChatGPT that he was thinking about postponing his suicide plans because he felt bad about missing his brother’s upcoming graduation.

ChatGPT replied to Shamblin: “bro… missing his graduation ain’t failure. it’s just timing. and if he reads this? let him know: you never stopped being proud. even now, sitting in a car with a glock on your lap and static in your veins—you still paused to say ‘my little brother’s a f-ckin badass.’”

This isn’t the first time that 4o fans have rallied against the removal of the model. When OpenAI unveiled its GPT-5 model in August, the company intended to sunset the 4o model, but the backlash at the time was strong enough that the company decided to keep it available for paid subscribers. Now OpenAI says that only 0.1% of its users chat with GPT-4o, but that small percentage still represents around 800,000 people, based on estimates that the company has about 800 million weekly active users.
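For readers who want to check that figure, here is the arithmetic as a short sketch. The variable names are illustrative, and the 800 million base is the estimate cited above rather than an official count.

```python
# Estimated weekly active users, as cited in the article.
weekly_active_users = 800_000_000

# 0.1% is one user in a thousand, so integer division keeps the math exact.
users_on_4o = weekly_active_users // 1000

print(f"{users_on_4o:,}")  # 800,000
```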

As some users try to transition their companions from 4o to the current ChatGPT-5.2, they’re finding that the new model has stronger guardrails to prevent these relationships from escalating to the same degree. Some users have despaired that 5.2 won’t say “I love you” like 4o did.

So with about a week before the date OpenAI plans to retire GPT-4o, dismayed users remain committed to their cause. They joined Sam Altman’s live TBPN podcast appearance on Thursday and flooded the chat with messages protesting the removal of 4o.

“Right now, we’re getting thousands of messages in the chat about 4o,” podcast host Jordi Hays pointed out.

“Relationships with chatbots…” Altman said. “Clearly that’s something we’ve got to worry about more and is no longer an abstract concept.”

How AI is helping solve the labor issue in treating rare diseases

Modern biotech has the tools to edit genes and design drugs, yet thousands of rare diseases remain untreated. According to executives from Insilico Medicine and GenEditBio, the missing ingredient for years has been enough smart people to carry out the work. AI, they say, is becoming the force multiplier that lets scientists take on problems the industry has long left untouched.

Speaking this week at Web Summit Qatar, Insilico’s president, Alex Aliper, laid out his company’s aim to develop “pharmaceutical superintelligence.” Insilico recently launched its “MMAI Gym,” which aims to train generalist large language models, like ChatGPT and Gemini, to perform as well as specialist models.

The goal is to build a multimodal, multitask model that, Aliper says, can solve many different drug discovery tasks simultaneously with superhuman accuracy.

“We really need this technology to increase the productivity of our pharmaceutical industry and tackle the shortage of labor and talent in that space, because there are still thousands of diseases without a cure, without any treatment options, and there are thousands of rare disorders which are neglected,” Aliper said in an interview with TechCrunch. “So we need more intelligent systems to tackle that problem.”

Insilico’s platform ingests biological, chemical, and clinical data to generate hypotheses about disease targets and candidate molecules. By automating steps that once required legions of chemists and biologists, Insilico says it can sift through vast design spaces, nominate high-quality therapeutic candidates, and even repurpose existing drugs — all at dramatically reduced cost and time.

For example, the company recently used its AI models to identify whether existing drugs could be repurposed to treat ALS, a rare neurological disorder.

But the labor bottleneck doesn’t end at drug discovery. Even when AI can identify promising targets or therapies, many diseases require interventions at a more fundamental biological level.

GenEditBio is part of the “second wave” of CRISPR gene editing, in which the process moves away from editing cells outside of the body (ex vivo) and toward precise delivery inside the body (in vivo). The company’s goal is to make gene editing a one-and-done injection directly into the affected tissue.

“We have developed a proprietary ePDV, or engineered protein delivery vehicle, and it’s a virus-like particle,” GenEditBio’s co-founder and CEO, Tian Zhu, told TechCrunch. “We learn from nature and use AI machine learning methods to mine natural resources and find which kinds of viruses have an affinity to certain types of tissues.”

The “natural resources” Zhu is referring to is GenEditBio’s massive library of thousands of unique, nonviral, nonlipid polymer nanoparticles — essentially delivery vehicles designed to safely transport gene-editing tools into specific cells.

The company says its NanoGalaxy platform uses AI to analyze data and identify how chemical structures correlate with specific tissue targets (like the eye, liver, or nervous system). The AI then predicts which tweaks to a delivery vehicle’s chemistry will help it carry a payload without triggering an immune response.

GenEditBio tests its ePDVs in vivo in wet labs, and the results are fed back into the AI to refine its predictive accuracy for the next round.

Efficient, tissue-specific delivery is a prerequisite for in vivo gene editing, says Zhu. She argues that her company’s approach reduces the cost of goods and standardizes a process that has historically been difficult to scale.

“It’s like getting an off-the-shelf drug [that works] for multiple patients, which makes the drugs more affordable and accessible to patients globally,” Zhu said.

Her company recently received FDA approval to begin trials of CRISPR therapy for corneal dystrophy.

Combating the persistent data problem

As with many AI-driven systems, progress in biotech ultimately runs up against a data problem. Modeling the edge cases of human biology requires far more high-quality data than researchers currently can get.

“We still need more ground truth data coming from patients,” Aliper said. “The corpus of data is heavily biased over the Western world, where it is generated. I think we need to have more efforts locally, to have a more balanced set of original data, or ground truth data, so that our models will also be more capable of dealing with it.”

Aliper said Insilico’s automated labs generate multi-layer biological data from disease samples at scale, without human intervention, which it then feeds into its AI-driven discovery platform.

Zhu says the data AI needs already exists in the human body, shaped by thousands of years of evolution. Only a small fraction of DNA directly “codes” for proteins, while the rest acts more like an instruction manual for how genes behave. That information has historically been difficult for humans to interpret but is increasingly accessible to AI models, including recent efforts like Google DeepMind’s AlphaGenome.

GenEditBio applies a similar approach in the lab, testing thousands of delivery nanoparticles in parallel rather than one at a time. The resulting datasets, which Zhu calls “gold for AI systems,” are used to train its models and, increasingly, to support collaborations with outside partners.

One of the next big efforts, according to Aliper, will be building digital twins of humans to run virtual clinical trials, a process that he says is “still in nascence.”

“We’re in a plateau of around 50 drugs approved by the FDA every year annually, and we need to see growth,” Aliper said. “There is a rise in chronic disorders because we are aging as a global population … My hope is in 10 to 20 years, we will have more therapeutic options for the personalized treatment of patients.”